Building Secure Smart Contracts

Brought to you by Trail of Bits, this repository offers guidelines and best practices for developing secure smart contracts. Contributions are welcome, you can contribute by following our contributing guidelines.

Table of Contents:

- Development Guidelines

- Code Maturity: Criteria for developers and security engineers to use when evaluating a codebase’s maturity

- High-Level Best Practices: Best practices for all smart contracts

- Incident Response Recommendations: Guidelines for creating an incident response plan

- Secure Development Workflow: A high-level process to follow during code development

- Token Integration Checklist: What to check when interacting with arbitrary tokens

- Learn EVM: Technical knowledge about the EVM

- EVM Opcodes: Information on all EVM opcodes

- Transaction Tracing: Helper scripts and guidance for generating and navigating transaction traces

- Arithmetic Checks: A guide to performing arithmetic checks in the EVM

- Yellow Paper Guidance: Symbol reference for easier reading of the Ethereum yellow paper

- Forks <> EIPs: Summaries of the EIPs included in each Ethereum fork

- Forks <> CIPs: Summaries of the CIPs and EIPs included in each Celo fork (EVM-compatible chain)

- Upgrades <> TIPs: Summaries of the TIPs included in each TRON upgrade (EVM-compatible chain)

- Forks <> BEPs: Summaries of the BEPs included in each BSC fork (EVM-compatible chain)

- Not So Smart Contracts: Examples of common smart contract issues, complete with descriptions, examples, and recommendations

- Program Analysis: Using automated tools to secure contracts

- Echidna: A fuzzer that checks your contract's properties

- Medusa: A next-gen fuzzer that checks your contract's properties

- Slither: A static analyzer with both CLI and scriptable interfaces

- Manticore: A symbolic execution engine that proves the correctness of properties

- For each tool, this training material provides:

- A theoretical introduction, an API walkthrough, and a set of exercises

- Exercises that take approximately two hours to gain practical understanding

- Resources: Assorted online resources

- Trail of Bits Blog Posts: A list of blockchain-related blog posts created by Trail of Bits

License

secure-contracts and building-secure-contracts are licensed and distributed under the AGPLv3 license. Contact us if you're looking for an exception to the terms.

List of Best Practices for Smart Contract Development

- Code Maturity: Criteria for developers and security engineers to use when evaluating a codebase’s maturity

- High-Level Best Practices: Essential high-level best practices for all smart contracts

- Token Integration Checklist: Important aspects to consider when interacting with various tokens

- Incident Response Recommendations: Guidelines on establishing an effective incident response plan

- Secure Development Workflow: A recommended high-level process to adhere to while writing code

- Preparing for a Security Review: A checklist of things to consider when preparing for a security review

Blockchain Maturity Evaluation

- Document version: 0.1.0

This document provides criteria for developers and security engineers to use when evaluating a codebase’s maturity. Deficiencies identified during this evaluation often stem from root causes within the software development life cycle that should be addressed through standardization or training and awareness programs. This document aims to push the industry towards higher quality requirements and to reduce risks associated with immature practices, such as the introduction of bugs, a broken development cycle, and technical debt.

The document can be used as a self-evaluation protocol for developers, or as an evaluation guideline for security engineers.

As technologies and tooling improve, standards and best practices evolve, and this document will be updated to reflect such progress. We invite the community to open issues to provide insights and feedback and to regularly revisit this document for new versions.

Rating system

This Codebase Maturity Evaluation uses five ratings:

- Missing: Not present / not implemented

- Weak: Several and/or significant areas of improvement have been identified.

- Moderate: The codebase follows adequate procedure, but it can be improved.

- Satisfactory: The codebase is above average, but it can be improved.

- Strong: Only small potential areas of improvement have been identified.

How are ratings determined? While the process for assigning ratings can vary due to a number of variables unique to each codebase (e.g., use cases, size and complexity of the codebase, specific goals of the audit, timeline), a general approach for determining ratings is as follows:

- If “Weak” criteria apply, “Weak” is applied.

- If none of the “Weak” criteria apply, and some “Moderate” criteria apply, “Moderate” can be applied.

- If all “Moderate” criteria apply, and some “Satisfactory” criteria apply, “Satisfactory” can be applied.

- If all “Satisfactory” criteria apply, and there is evidence of exceptional practices or security controls in place, “Strong” can be applied.

Arithmetic

Weak

A weak arithmetic maturity reflects the lack of a systematic approach toward ensuring the correctness of the operations and reducing the risks of arithmetic-related flaws such as overflow, rounding, precision loss, and trapping. Specific criteria include, but are not limited to, the following:

- No explicit overflow protection (e.g., Solidity 0.8 or SafeMath) is used, and no justification for the lack of protection exists.

- Intentional usage of unchecked arithmetic is not sufficiently documented.

- There is no specification of the arithmetic formulas, or the specification does not match the code.

- No explicit testing strategy has been identified to increase confidence in the system’s arithmetic.

- The testing does not cover critical—or several—arithmetic edge cases.

Moderate

This rating indicates that the codebase follows best practices, but lacks a systematic approach toward ensuring the correctness of the arithmetic operations. The code is well structured to facilitate the testing of operations, and multiple testing techniques are used. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Unchecked arithmetics are minimal and justified, and extra documentation has been provided.

- All overflow and underflow risks are documented and tested.

- Explicit rounding up or down is used for all operations that lead to precision loss.

- All rounding risks are documented and described in the specification.

- An automated testing technique is used for arithmetic-related code (e.g., fuzzing, formal methods).

- Arithmetic operations are structured through stateless functions to facilitate their testing.

- System parameters are bounded, the ranges are explained, and their impacts are propagated through the documentation/specification.

Satisfactory

Arithmetic-related risks are clearly identified and understood. A theoretical analysis ensures that the code is consistent with the specification. Specific criteria include, but are not limited to, the following: The system meets all moderate criteria.

- Precision loss is analyzed against a ground-truth (e.g, using an infinite-precision arithmetic library), and the loss is bounded and documented.

- All trapping operations (overflow protection, divide by zero, etc.) and their impacts are identified and documented.

- The arithmetic specification is a one-to-one match with the codebase. Each formula relevant to the white paper/specification has a respective function that is easily identifiable.

- The automated testing technique(s) cover all significant arithmetic operations and are run periodically, or ideally in the CI.

Auditing

“Auditing” refers to the proper use of events and monitoring procedures within the system.

Weak

The system has no strategy towards emitting or using events. Specific criteria include, but are not limited to, the following:

- Events are missing for critical components updates.

- There are no clear or consistent guidelines for event-emitting functions.

- The same events are reused for different purposes.

Moderate

The system is built to be monitored. An off-chain infrastructure for detecting unexpected behavior is in place, and the team can be notified about events. Clear documentation highlights how the events should be used by third parties. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Events are emitted for all critical functions.

- There is an off-chain monitoring system that logs events, and a monitoring plan has been implemented.

- The monitoring documentation describes the purpose of events, how events should be used, and their assumptions.

- The monitoring documentation describes how to review logs in order to audit a failure.

- An incident response plan describes how the protocol’s actors must react in case of failure.

Satisfactory

The system is well monitored, and processes are in place to react in case of defect or failure. Specific criteria include, but are not limited to, the following: The system meets all moderate criteria.

- The off-chain monitoring system triggers notifications and/or alarms if unexpected behavior or events occur.

- Well-defined roles and responsibilities are defined for cases where unexpected behavior or vulnerabilities are detected.

- The incident response plan is regularly tested through a cybersecurity incident response exercise.

Authentication / access controls

“Authentication / access controls” refers to the use of robust access controls to handle identification and authorization and to ensure safe interactions with the system.

Weak

The expected access controls are unclear or inconsistent; one address may be in control of the entire system, and there is no indication of additional safeguards for this account. Specific criteria include, but are not limited to, the following: No access controls are in place for privileged functions, or some privileged functions lack access controls.

- There are no differentiated privileged actors or roles.

- All privileged functions are callable by one address, and there is no indication that this address will have further access controls (e.g., multisig).

Moderate

The system adheres to best practices, the major actors are documented and tested, and risks are limited through a clear separation of privileges. Specific criteria include, but are not limited to, the following: None of the weak criteria apply to the codebase.

- All privileged functions have some form of access control.

- The principle of least privilege is followed for all components.

- There are different roles in the system, and privileges for different roles do not overlap.

- There is clear documentation about the actors and their respective privileges in the system.

- Tests cover every actor-specific privilege.

- Roles can be revoked (if applicable).

- Two-step processes are used for privileged operations performed by Externally Owned Accounts (EOA).

Satisfactory

All actors and roles are clearly documented, including their expected privileges, and the implementation is consistent with all expected behavior and thoroughly tested. All known risks are highlighted and visible to users. Specific criteria include, but are not limited to, the following:

- The system meets all moderate criteria.

- All actors and roles are well documented.

- Actors with privileges are not EOAs.

- Leakage or loss of keys from one signer or actor does not compromise the system or affect other roles.

- Privileged functions are tested against known attack vectors.

Complexity management

“Complexity management” refers to the separation of logic into functions with a clear purpose. The presence of clear structures designed to manage system complexity, including the separation of system logic into clearly defined functions, is the central focus when evaluating the system with respect to this category.

Weak

The code has unnecessary complexity (e.g., failure to adhere to well-established software development practices) that hinders automated and/or manual review. Specific criteria include, but are not limited to, the following:

- Functions overuse nested operations (if/then/else, ternary operators, etc.).

- Functions have unclear scope, or their scope include too many components.

- Functions have unnecessary redundant code/code duplication.

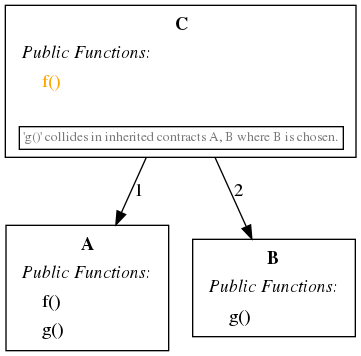

- Contracts have a complex inheritance tree.

Moderate

The most complex parts of the codebase are well identified, and their complexity is reduced as much as possible. Specific criteria include, but are not limited to, the following: None of the weak criteria apply to the codebase.

- Functions have a high cyclomatic complexity (≥11).

- Critical functions are well scoped, making them easy to understand and test.

- Redundant code in the system is limited and justified.

- Inputs and their expected values are clear, and validation is performed where necessary.

- A clear and documented naming convention is in place for functions, variables, and other identifiers, and the codebase clearly adheres to the convention.

- Types are not used to enforce correctness.

Satisfactory

The code has little or no unnecessary complexity, any necessary complexity is well documented, and all code is easy to test. Specific criteria include, but are not limited to, the following:

- The system meets all moderate criteria.

- Each function has a specific and clear purpose and is clearly documented.

- Core functions are straightforward to test via unit tests or automated testing.

- There is no redundant behavior.

Decentralization

“Decentralization” refers to the presence of a decentralized governance structure for mitigating insider threats and managing risks posed by privileged actors. Decentralization is not required to have a mature smart contract codebase, and a project that does not claim to be decentralized might not fit within this category. However, if a single point of failure exists, it must be clearly identified and proper protections must be put in place.

A note on upgradeability: Upgradeability is often an important feature to consider when reviewing the decentralization of a system. While upgradeability is not, at a fundamental or theoretical level, incompatible with decentralization, it is, in practice, an obstacle in realizing robust system decentralization. Upgradeable systems that aim to be decentralized have additional requirements to demonstrate that their upgradeable components do not impact their decentralization.

Weak

The system has several points of centrality that may not be clearly visible to the users. Specific criteria include, but are not limited to, the following:

- Critical functionalities are upgradable by a single entity (e.g., EOA, multisig, DAO), and an arbitrary user cannot opt out from the upgrade or exit the system before the upgrade is triggered.

- A single entity is in direct control of user funds.

- All decision making is controlled by a single entity.

- System parameters can be changed at any time by a single entity.

- Permission/authorization by a centralized actor is required to use the contracts.

Moderate

Centralization risks are identified, justified and documented, and users might choose to not follow an upgrade. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Risks related to trusted parties (if any) are clearly documented.

- Users have a documented path to opt out of upgrades or exit the system, or upgradeability is present only for non-critical features.

- Privileged actors are not able to unilaterally move funds out of, or trap funds in, the protocol.

- All privileges are documented.

Satisfactory

The system provides clear justification to demonstrate its path toward decentralization. Specific criteria include, but are not limited to the following:

- The system meets all moderate criteria.

- The system does not rely on on-chain voting for critical updates, or it is demonstrated that the on-chain voting does not have centralization risks. On-chain voting systems tend to have hidden centralized points and require careful consideration.

- Deployment risks are documented.

- Risks related to external contract interactions are documented.

- The critical configuration parameters are immutable once deployed, or the users have a documented path to opt out of the changes or exit the system if they are updated.

Documentation

“Documentation” refers to the presence of comprehensive and readable codebase documentation, including inline code comments, the roles and responsibilities of system entities, system invariants, use cases, expected system behavior, and data flow diagrams.

Weak

Minimal documentation is present, or documentation is clearly incomplete or outdated. Specific criteria include, but are not limited to, the following:

- There is only a high-level description of the system.

- Code comments do not match the documentation.

- Documentation is not publicly available. (Note that this applies only to codebases meant for general public usage.)

- Documentation depends directly on a set of artificial terms or words that are not clearly explained.

Moderate

The documentation adheres to best practices. Important components are documented; the documentation exists at different levels (such as inline code comments, NatSpec, and system documentation); and there is consistency across all documentation. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Documentation is written in a clear manner, and the language is not ambiguous.

- A glossary of terms exists for business-specific words and phrases.

- The architecture is documented through diagrams or similar constructs.

- Documentation includes user stories.

- Documentation clearly identifies core/critical components, such as those that significantly affect the system and/or its users.

- Reading documentation is sufficient to understand the expected behavior of the system without delving into specific implementation details.

- All critical functions are documented.

- All critical code blocks are documented.

- Known risks and system limitations are documented.

Satisfactory

Thorough documentation exists spanning all of the areas required for a moderate rating, as well as system corner cases, detailed aspects of users stories, and all features. The documentation matches the code. Specific criteria include, but are not limited to, the following:

- The system meets all moderate criteria.

- The user stories cover all user operations.

- There are detailed descriptions of the expected system behaviors.

- The implementation is consistent with the specification; if there are deviations from the specification, they are strongly justified, thoroughly explained, and reasonable.

- Function and system invariants are clearly defined in the documentation.

- Consistent naming conventions are followed throughout the codebase and documentation.

- There is specific documentation for end-users and for developers.

Transaction ordering risks

“Transaction ordering risks” refers to the resilience against malicious ordering of the transactions. This includes toxic forms of Miner Extractable Value (MEV), such as front-running, sandwiching, forced liquidations, and oracle attacks.

Weak

There are unexpected/undocumented risks that arise due to the ordering of transactions. Specific criteria include, but are not limited to, the following:

- Transaction ordering risks are not clearly identified or documented.

- Protocols or user assets are at risk of unexpected transaction ordering.

- The system relies on unjustified constraints to prevent MEV extraction.

- The system makes unproven assumptions about which attributes may or may not be manipulatable by an MEV extractor.

Moderate

Risks related to transaction ordering are identified and, when applicable, limited through on-chain mitigations. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Transaction ordering risks related to user operations are limited, justified, and documented.

- If MEV is inherent to the protocol, reasonable mitigations, such as time delays and slippage checks, are in place.

- The testing strategy emphasizes transaction ordering risks.

- The system uses tamper-resistant oracles.

Satisfactory

All transaction ordering risks are documented and clearly justified. The known risks are highlighted through documentation and tests and are visible to the users. Specific criteria include, but are not limited to, the following:

- The system meets all moderate criteria.

- The documentation centralizes all known MEV opportunities.

- Transactions ordering risks on privileged operations (e.g., system updates) are limited, justified, and documented.

- Known transaction ordering opportunities have tests highlighting the underlying risks.

Low-level manipulation

“Low-level manipulation” refers to the usage of low-level operations (e.g., assembly code, bitwise operations, low-level calls) and relevant justification within the codebase.

Weak

The code uses unjustified low-level manipulations. Specific criteria include, but are not limited to, the following:

- Usage of assembly code or low-level manipulation is not justified; most can likely be replaced by high-level code.

Moderate

Low level operations are justified and limited. Extra documentation and testing is provided for them. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Use of assembly code is limited and justified.

- Inline code comments are present for each assembly operation.

- The code does not re-implement well-established, low-level library functionality without justification (e.g., OZ’s SafeERC20).

- A high-level implementation reference exists for each function with complex assembly code.

Satisfactory

Thorough documentation, justification, and testing exists to increase confidence in all usage of assembly code and low-level manipulation. Implementations are validated with automated testing against a reference implementation. Specific criteria include, but are not limited to, the following:

- The system meets all moderate criteria.

- Differential fuzzing, or a similar technique, is used to compare the high-level reference implementation against its low level counterpart.

- Risks related to compiler optimization or experimental features are identified and justified.

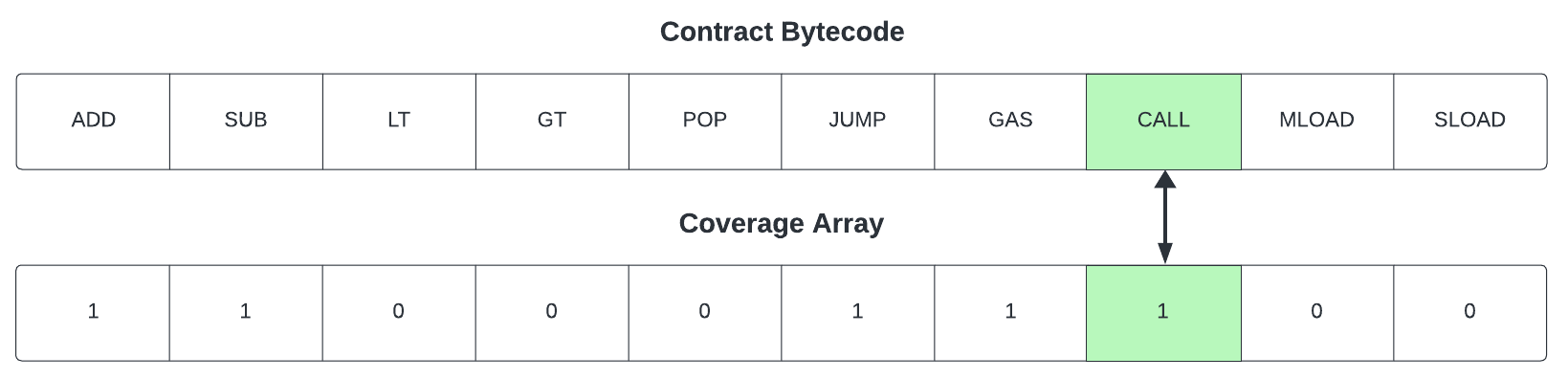

Testing and verification

“Testing and verification” refers to the robustness of testing procedures of techniques (including unit tests, integration tests, fuzzing, and symbolic execution) as well as the amount of test coverage.

Weak

Testing is limited and covers only some of the “happy paths.” Specific criteria include, but are not limited to, the following:

- Common or expected use cases are not fully tested.

- Provided tests fail for the codebase.

- There is insufficient or non-existent documentation to run the test suite “out of the box.”

Moderate

Testing adheres to best practices and covers a large majority of the code. An automated testing technique is used to increase the confidence of the most critical components. Specific criteria include, but are not limited to, the following:

- None of the weak criteria apply to the codebase.

- Most functions, including normal use cases, are tested.

- All provided tests for the codebase pass.

- Code coverage is used for the unit tests, and the report is easy to retrieve.

- An automated testing technique is used for critical components.

- Testing is implemented as part of the CI/CD pipeline.

- Integration tests are implemented, if applicable.

- Test code follows best practices and does not trigger warnings by the compiler or static analysis tools.

Satisfactory

Testing is clearly an important part of codebase development. Tests include unit tests and end-to-end testing. Code properties are clearly identified and validated with an automated testing technique. Specific criteria include, but are not limited to, the following:

- The system meets all moderate criteria.

- The codebase reaches 100% of reachable branch and statement coverage in unit tests.

- An end-to-end automated testing technique is used and all users' entry points are covered.

- Test cases are isolated and do not depend on each other or on the execution order, unless justified.

- Mutant testing is used to detect missing or incorrect tests/properties.

Development Guidelines

Follow these high-level recommendations to build more secure smart contracts.

Design Guidelines

Discuss the design of the contract ahead of time, before writing any code.

Documentation and Specifications

Write documentation at different levels and update it as you implement the contracts:

- A plain English description of the system, describing the contracts' purpose and any assumptions about the codebase.

- Schema and architectural diagrams, including contract interactions and the system's state machine. Use Slither printers to help generate these schemas.

- Thorough code documentation. Use the Natspec format for Solidity.

On-chain vs Off-chain Computation

- Keep as much code off-chain as possible. Keep the on-chain layer small. Pre-process data off-chain in a way that simplifies on-chain verification. Need an ordered list? Sort it off-chain, then check its order on-chain.

Upgradeability

Refer to our blog post for different upgradeability solutions. If you are using delegatecall to achieve upgradability, carefully review all items of the delegatecall proxy guidance. Decide whether or not to support upgradeability before writing any code, as this decision will affect your code's structure. Generally, we recommend:

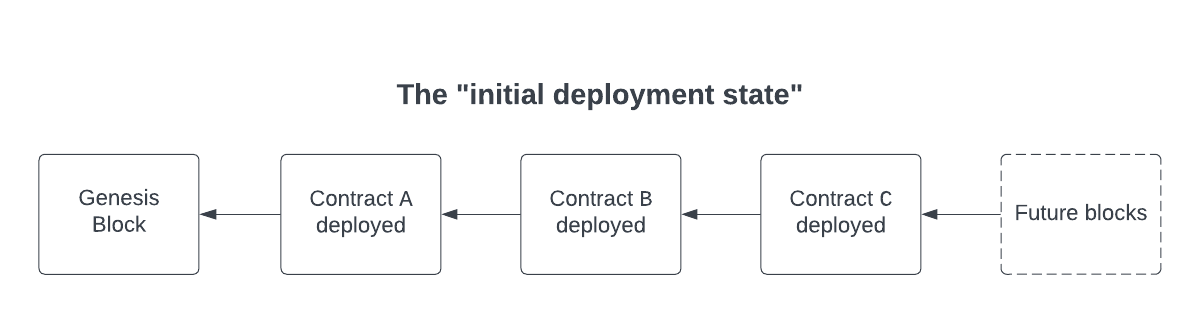

- Favoring contract migration over upgradeability. Migration systems offer many of the same advantages as upgradeable systems but without their drawbacks.

- Using the data separation pattern instead of the delegatecall proxy pattern. If your project has a clear abstraction separation, upgradeability using data separation will require only a few adjustments. The delegatecall proxy is highly error-prone and demands EVM expertise.

- Document the migration/upgrade procedure before deployment. Write the procedure to follow ahead of time to avoid errors when reacting under stress. It should include:

- The calls that initiate new contracts

- The keys' storage location and access method

- Deployment verification: develop and test a post-deployment script.

Delegatecall Proxy Pattern

The delegatecall opcode is a sharp tool that must be used carefully. Many high-profile exploits involve little-known edge cases and counter-intuitive aspects of the delegatecall proxy pattern. To aid the development of secure delegatecall proxies, utilize the slither-check-upgradability tool, which performs safety checks for both upgradable and immutable delegatecall proxies.

- Storage layout: Proxy and implementation storage layouts must be the same. Instead of defining the same state variables for each contract, both should inherit all state variables from a shared base contract.

- Inheritance: Be aware that the order of inheritance affects the final storage layout. For example,

contract A is B, Candcontract A is C, Bwill not have the same storage layout if both B and C define state variables. - Initialization: Immediately initialize the implementation. Well-known disasters (and near disasters) have featured an uninitialized implementation contract. A factory pattern can help ensure correct deployment and initialization and reduce front-running risks.

- Function shadowing: If the same method is defined on the proxy and the implementation, then the proxy’s function will not be called. Be mindful of

setOwnerand other administration functions commonly found on proxies. - Direct implementation usage: Configure implementation state variables with values that prevent direct use, such as setting a flag during construction that disables the implementation and causes all methods to revert. This is particularly important if the implementation also performs delegatecall operations, as this may lead to unintended self-destruction of the implementation.

- Immutable and constant variables: These variables are embedded in the bytecode and can get out of sync between the proxy and implementation. If the implementation has an incorrect immutable variable, this value may still be used even if the same variable is correctly set in the proxy’s bytecode.

- Contract Existence Checks: All low-level calls, including delegatecall, return true for an address with empty bytecode. This can mislead callers into thinking a call performed a meaningful operation when it did not or cause crucial safety checks to be skipped. While a contract’s constructor runs, its bytecode remains empty until the end of execution. We recommend rigorously verifying that all low-level calls are protected against nonexistent contracts. Keep in mind that most proxy libraries (such as Openzeppelin's) do not automatically perform contract existence checks.

For more information on delegatecall proxies, consult our blog posts and presentations:

- Contract Upgradability Anti-Patterns: Describes the differences between downstream data contracts and delegatecall proxies with upstream data contracts and how these patterns affect upgradability.

- How the Diamond Standard Falls Short: Explores delegatecall risks that apply to all contracts, not just those following the diamond standard.

- Breaking Aave Upgradeability: Discusses a subtle problem we discovered in Aave

ATokencontracts, resulting from the interplay between delegatecall proxies, contract existence checks, and unsafe initialization. - Contract Upgrade Risks and Recommendations: A Trail of Bits talk on best practices for developing upgradable delegatecall proxies. The section starting at 5:49 describes general risks for non-upgradable proxies.

Implementation Guidelines

Aim for simplicity. Use the simplest solution that meets your needs. Any member of your team should understand your solution.

Function Composition

Design your codebase architecture to facilitate easy review and allow testing individual components:

- Divide the system's logic, either through multiple contracts or by grouping similar functions together (e.g. authentication, arithmetic).

- Write small functions with clear purposes.

Inheritance

- Keep inheritance manageable. Though inheritance can help divide logic you should aim to minimize the depth and width of the inheritance tree.

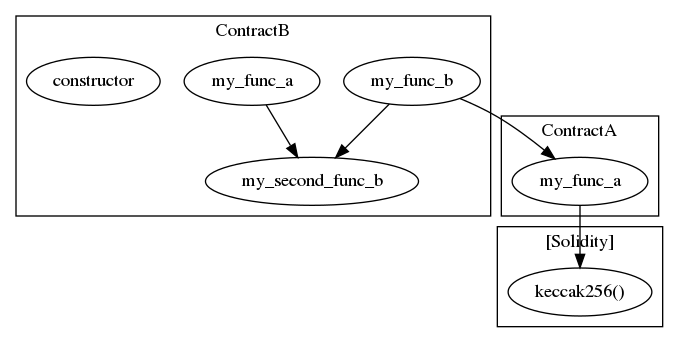

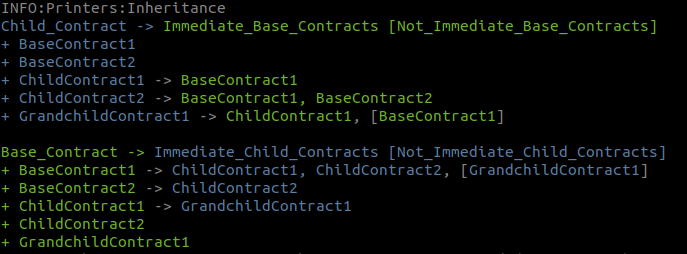

- Use Slither’s inheritance printer to check contract hierarchies. The inheritance printer can help review the hierarchy size.

Events

- Log all critical operations. Events facilitate contract debugging during development and monitoring after deployment.

Avoid Known Pitfalls

- Be aware of common security issues. Many online resources can help, such as Ethernaut CTF, Capture the Ether, and Not So Smart Contracts.

- Review the warnings sections in the Solidity documentation. These sections reveal non-obvious language behaviors.

Dependencies

- Use well-tested libraries. Importing code from well-tested libraries reduces the likelihood of writing buggy code. If writing an ERC20 contract, use OpenZeppelin.

- Use a dependency manager instead of copying and pasting code. Always keep external sources up-to-date.

Testing and Verification

- Create thorough unit tests. An extensive test suite is essential for developing high-quality software.

- Develop custom Slither and Echidna checks and properties. Automated tools help ensure contract security. Review the rest of this guide to learn how to write efficient checks and properties.

Solidity

- Favor Solidity versions outlined in our Slither Recommendations. We believe older Solidity versions are more secure and have better built-in practices. Newer versions may be too immature for production and need time to develop.

- Compile using a stable release, but check for warnings with the latest release. Verify that the latest compiler version reports no issues with your code. However, since Solidity has a fast release cycle and a history of compiler bugs, we do not recommend the newest version for deployment. See Slither’s solc version recommendation.

- Avoid inline assembly. Assembly requires EVM expertise. Do not write EVM code unless you have mastered the yellow paper.

Deployment Guidelines

After developing and deploying the contract:

- Monitor contracts. Observe logs and be prepared to respond in the event of contract or wallet compromise.

- Add contact information to blockchain-security-contacts. This list helps third parties notify you of discovered security flaws.

- Secure privileged users' wallets. Follow our best practices for hardware wallet key storage.

- Develop an incident response plan. Assume your smart contracts can be compromised. Possible threats include contract bugs or attackers gaining control of the contract owner's keys.

Token Integration Checklist

This checklist offers recommendations for interacting with arbitrary tokens. Ensure that every unchecked item is justified and that its risks are understood.

For convenience, all Slither utilities can be run directly on a token address, as shown below:

slither-check-erc 0xdac17f958d2ee523a2206206994597c13d831ec7 TetherToken --erc erc20

slither-check-erc 0x06012c8cf97BEaD5deAe237070F9587f8E7A266d KittyCore --erc erc721

Use the following Slither output for the token to follow this checklist:

- slither-check-erc [target] [contractName] [optional: --erc ERC_NUMBER]

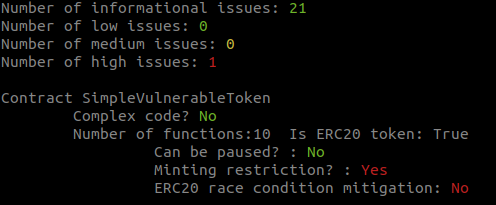

- slither [target] --print human-summary

- slither [target] --print contract-summary

- slither-prop . --contract ContractName # requires configuration, and use of Echidna and Manticore

General Considerations

- The contract has a security review. Avoid interacting with contracts that lack a security review. Assess the review's duration (i.e., the level of effort), the reputation of the security firm, and the number and severity of findings.

- You have contacted the developers. If necessary, alert their team to incidents. Locate appropriate contacts on blockchain-security-contacts.

- They have a security mailing list for critical announcements. Their team should advise users (like you!) on critical issues or when upgrades occur.

Contract Composition

-

The contract avoids unnecessary complexity. The token should be a simple contract; tokens with complex code require a higher standard of review. Use Slither’s

human-summaryprinter to identify complex code. -

The contract uses

SafeMath. Contracts that do not useSafeMathrequire a higher standard of review. Inspect the contract manually forSafeMathusage. -

The contract has only a few non-token-related functions. Non-token-related functions increase the likelihood of issues in the contract. Use Slither’s

contract-summaryprinter to broadly review the code used in the contract. -

The token has only one address. Tokens with multiple entry points for balance updates can break internal bookkeeping based on the address (e.g.,

balances[token_address][msg.sender]might not reflect the actual balance).

Owner Privileges

-

The token is not upgradeable. Upgradeable contracts may change their rules over time. Use Slither’s

human-summaryprinter to determine if the contract is upgradeable. -

The owner has limited minting capabilities. Malicious or compromised owners can abuse minting capabilities. Use Slither’s

human-summaryprinter to review minting capabilities and consider manually reviewing the code. - The token is not pausable. Malicious or compromised owners can trap contracts relying on pausable tokens. Identify pausable code manually.

- The owner cannot blacklist the contract. Malicious or compromised owners can trap contracts relying on tokens with a blacklist. Identify blacklisting features manually.

- The team behind the token is known and can be held responsible for abuse. Contracts with anonymous development teams or teams situated in legal shelters require a higher standard of review.

ERC20 Tokens

ERC20 Conformity Checks

Slither includes the slither-check-erc utility that checks a token's conformance to various ERC standards. Use slither-check-erc to review the following:

-

TransferandtransferFromreturn a boolean. Some tokens do not return a boolean for these functions, which may cause their calls in the contract to fail. -

The

name,decimals, andsymbolfunctions are present if used. These functions are optional in the ERC20 standard and may not be present. -

Decimalsreturns auint8. Some tokens incorrectly return auint256. In these cases, ensure the returned value is below 255. - The token mitigates the known ERC20 race condition. The ERC20 standard has a known race condition that must be mitigated to prevent attackers from stealing tokens.

Slither includes the slither-prop utility, which generates unit tests and security properties to find many common ERC flaws. Use slither-prop to review the following:

-

The contract passes all unit tests and security properties from

slither-prop. Run the generated unit tests, then check the properties with Echidna and Manticore.

Risks of ERC20 Extensions

The behavior of certain contracts may differ from the original ERC specification. Review the following conditions manually:

-

The token is not an ERC777 token and has no external function call in

transferortransferFrom. External calls in the transfer functions can lead to reentrancies. -

TransferandtransferFromshould not take a fee. Deflationary tokens can lead to unexpected behavior. - Consider any interest earned from the token. Some tokens distribute interest to token holders. If not taken into account, this interest may become trapped in the contract.

Token Scarcity

Token scarcity issues must be reviewed manually. Check for the following conditions:

- The supply is owned by more than a few users. If a few users own most of the tokens, they can influence operations based on the tokens' distribution.

- The total supply is sufficient. Tokens with a low total supply can be easily manipulated.

- The tokens are located in more than a few exchanges. If all tokens are in one exchange, compromising the exchange could compromise the contract relying on the token.

- Users understand the risks associated with large funds or flash loans. Contracts relying on the token balance must account for attackers with large funds or attacks executed through flash loans.

- The token does not allow flash minting. Flash minting can lead to drastic changes in balance and total supply, requiring strict and comprehensive overflow checks in the token operation.

Known non-standard ERC20 tokens

Protocols that allow integration with arbitrary tokens must take care to properly handle certain well-known non-standard ERC20 tokens. Refer to the non-standard-tokens list for a list of well-known tokens that contain additional risks.

ERC721 Tokens

ERC721 Conformity Checks

The behavior of certain contracts may differ from the original ERC specification. Review the following conditions manually:

- Transfers of tokens to the 0x0 address revert. Some tokens allow transfers to 0x0 and consider tokens sent to that address to have been burned; however, the ERC721 standard requires that such transfers revert.

-

safeTransferFromfunctions are implemented with the correct signature. Some token contracts do not implement these functions. Transferring NFTs to one of those contracts can result in a loss of assets. -

The

name,decimals, andsymbolfunctions are present if used. These functions are optional in the ERC721 standard and may not be present. -

If used,

decimalsreturns auint8(0). Other values are invalid. -

The

nameandsymbolfunctions can return an empty string. This behavior is allowed by the standard. -

The

ownerOffunction reverts if thetokenIdis invalid or refers to a token that has already been burned. The function cannot return 0x0. This behavior is required by the standard but may not always be implemented correctly. - A transfer of an NFT clears its approvals. This is required by the standard.

- The token ID of an NFT cannot be changed during its lifetime. This is required by the standard.

Common Risks of the ERC721 Standard

Mitigate the risks associated with ERC721 contracts by conducting a manual review of the following conditions:

-

The

onERC721Receivedcallback is taken into account. External calls in the transfer functions can lead to reentrancies, especially when the callback is not explicit (e.g., insafeMintcalls). -

When an NFT is minted, it is safely transferred to a smart contract. If a minting function exists, it should behave similarly to

safeTransferFromand handle the minting of new tokens to a smart contract properly, preventing asset loss. - Burning a token clears its approvals. If a burning function exists, it should clear the token’s previous approvals.

Known non-standard ERC20 tokens

The following tokens are known to be non-standard ERC20 tokens. They may have additional risks that must be covered.

Missing Revert

These tokens do not revert when a transfer fails, e.g. due to missing funds. Protocols that integrate these tokens must include a check for the transfer function's returned boolean success status and handle the failure case appropriately.

| Token | Notes |

|---|---|

| Basic Attention Token (BAT) | |

| Huobi Token (HT) | |

| Compound USD Coin (cUSDC) | |

| 0x Protocol Token (ZRX) |

Transfer Hooks

These tokens include ERC777-like transfer hooks. Protocols that interact with tokens that include transfer hooks must be extra careful to protect against reentrant calls when dealing with these tokens, because control is handed back to the caller upon transfer. This can also affect cross-protocol reentrant calls to view functions.

| Token | Notes |

|---|---|

| Amp (AMP) | |

| The Tokenized Bitcoin (imBTC) |

Missing Return Data / Transfer Success Status

These tokens do not return any data from the external call when transferring tokens. Protocols using an interface that specifies a return value when transferring tokens will revert. Solidity includes automatic checks on the return data size when decoding return values of an expected size.

| Token | Notes |

|---|---|

| Binance Coin (BNB) | Only missing return data on transfer. transferFrom returns true. |

| OMGToken (OMG) | |

| Tether USD (USDT) |

Permit No-op

Does not revert when calling permit. Protocols that use EIP-2612 permits should check that the token allowance has increased or is sufficient. See Multichain's incident.

| Token | Notes |

|---|---|

| Wrapped Ether (WETH) | Includes a non-reverting fallback function. |

Additional Non-standard Behavior

Additional non-standard token behavior that could be problematic includes:

- fee on transfers

- upgradeable contracts (USDC)

- tokens with multiple address entry-points to the same accounting state

- non-standard decimals (USDC: 6)

- non-standard permits (DAI)

- do not reduce allowance when it is the maximum value

- do not require allowance for transfers from self

- revert for approval of large amounts

>= 2^96 < 2^256 - 1(UNI, COMP)

Refer to d-xo/weird-erc20 for additional non-standard ERC20 tokens.

Incident Response Recommendations

How you respond during an incident is a direct reflection of your efforts to prepare for such an event. Each team or project's needs will vary so we provide the guidelines below as a starting point. Adherence to our guidelines can help you shift from a reactive approach to a proactive approach by planning with the assumption that incidents are inevitable. To fully leverage the following guidelines, consider them throughout the application development process.

Application Design

- Identify which components should or should not be:

- Pausable. While pausing a component can be beneficial during an incident, you must assess its potential impact on other contracts.

- Migratable or upgradeable. Discovering a bug might necessitate a migration strategy or contract upgrade to fix the issue; note, however, that upgradeability has its own sets of risks. Making all contracts upgradeable might not be the best approach.

- Decentralized. Using decentralized components can sometimes limit what rescue measures are possible and may require a higher amount of coordination.

- Begin to identify important system invariants. This helps to determine what you will need to monitor and what events may be necessary to do so effectively.

- Evaluate what additional events are needed. A missed event in a critical location might result in unnoticed incidents.

- Evaluate what components must be on-chain and off-chain. On-chain components are generally more at risk, but off-chain components push the risks to the off-chain owner.

- Use fine-grained access controls. Avoid setting all access controls to be available to an EOA. Opt for multisig wallets/MPC, and avoid delegating several roles to one address (e.g., the key responsible for setting fees shouldn't have access to the upgradeability feature).

Documentation

- Assemble a runbook of common actions you may need to perform. It's not possible or practical to exhaustively detail how you'll respond to every type of incident. But you can start to document procedures for some of the more important ones as well as actions that might be common across multiple scenarios (e.g., pausing, rotating owner keys, upgrading an implementation). This can also include scripts or snippets of code to facilitate performing these actions in a reproducible manner.

- Document how to interpret events emission. Only emitting events isn't sufficient; proper documentation is crucial, and users should be empowered to identify and decode them.

- Document how to access wallets. Clearly outline how to access wallets with special roles in the system. This should include both the location as well as access procedures for each wallet.

- Document the deployment and upgrade process. Deployment and upgrade processes are risky and must be thoroughly documented. This should include how to test the deployment/upgrade (e.g., using fork testing) and how to validate it (e.g., using a post-deployment script).

- Document how to contact users and external dependencies. Define guidelines regarding which stakeholders to contact, including the timing and mode of communication in case of incidents. The right communication at the right time is key to maintaining trust.

Process

- Conduct periodic training and incident response exercises. Regularly organize training sessions and incident response exercises. Such measures ensure that employees remain updated and can help highlight any flaws in the current incident response protocol.

- Remember to consider off-chain components when planning. While much of this document is concerned with on-chain code, compromised frontends or social media accounts are also common sources of incidents.

- Identify incident owners, with at least:

- A technical lead. Responsible for gathering and centralizing technical data.

- A communication lead. Tasked with internal and external communication.

- A legal lead. Either provides legal advice or ensures the right legal entities are contacted. It might also be worth considering liaison with appropriate law enforcement agencies.

- Use monitoring tools. You may opt for a third-party product, an in-house solution, or a combination of both. Third-party montoring will identify more generally suspicious transactions but may not be as in tune with system-specific metrics like health factors, collateralization ratios, or if an AMM invariant starts to drift. In-house monitoring, on the other hand, requires more engineering effort to setup and maintain, but can be tailored specifically to your needs.

- Carefully consider automating certain actions based on monitoring alerts. You may wish to automatically pause or move the system into a safer state if certain actvities are detected given how quickly some exploits are carried out. However, also keep in mind the impact and likelihood of a false positive triggering such a mechanism and how disruptive that could be.

Threat Intelligence

- Identify similar protocols, and stay informed of any issues affecting them. This could include forks, implementations on other chains, or protocols in the same general class (e.g., other lending protocols). Being aware of vulnerabilities in similar systems can help preemptively address potential threats in your own.

- Identify your dependencies, and follow their communication channels to be alerted in case of an issue. Follow their Twitter, Discord, Telegram, newsletter, etc. This includes both on-chain as well as off-chain (e.g., libraries, toolchain) dependencies.

- Maintain open communication lines with your dependencies' owners. This will help you to stay informed if one of your dependencies is compromised.

- Subscribe to the BlockThreat newsletter. BlockThreat will keep you informed about recent incidents and developments in blockchain security. The nature of blockchains means we have a culture of learning in the open so take advantage of this and learn from your peers.

Additionally, consider conducting a threat modeling exercise. This exercise will identify risks that an application faces at both the structural and operational level. If you're interested in undertaking such an exercise and would like to work with us, contact us.

Resources

- An Incident Response Plan for Startups

- A minimum viable incident response plan, a great starting point for a smaller team. Especially in combination with the Yearn example below, which is tailored a bit more for web3 teams.

- The practical guide to incident management

- An approachable guide for incident response. Chapter 4 includes examples for how to approach practicing your process.

- PagerDuty Incident Response

- A very detailed handbook of how PagerDuty handles incident response themselves. Some useful ideas and resources, but more practical for larger organizations.

- Emergency Procedures for Yearn Finance

- Rekt pilled: What to do when your dApp gets pwned and how to stay kalm - Heidi Wilder (DSS 2023)

- Crisis Handbook - Smart Contract Hack (SEAL)

Community Incident Retrospectives

Secure Development Workflow

Follow this high-level process while developing your smart contracts for enhanced security:

- Check for known security issues:

- Review your contracts using Slither, which has over 70 built-in detectors for common vulnerabilities. Run it on every check-in with new code and ensure it gets a clean report (or use triage mode to silence certain issues).

- Consider special features of your contract:

-

If your contracts are upgradeable, review your upgradeability code for flaws using

slither-check-upgradeabilityor Crytic. We have documented 17 ways upgrades can go sideways. -

If your contracts claim to conform to ERCs, check them with

slither-check-erc. This tool instantly identifies deviations from six common specs. -

If you have unit tests in Truffle, enrich them with

slither-prop. It automatically generates a robust suite of security properties for features of ERC20 based on your specific code. - If you integrate with third-party tokens, review our token integration checklist before relying on external contracts.

- Visually inspect critical security features of your code:

- Review Slither's inheritance-graph printer to avoid inadvertent shadowing and C3 linearization issues.

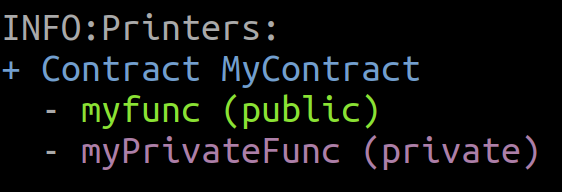

- Review Slither's function-summary printer, which reports function visibility and access controls.

- Review Slither's vars-and-auth printer, which reports access controls on state variables.

- Document critical security properties and use automated test generators to evaluate them:

- Learn to document security properties for your code. Although challenging at first, it is the single most important activity for achieving a good outcome. It is also a prerequisite for using any advanced techniques in this tutorial.

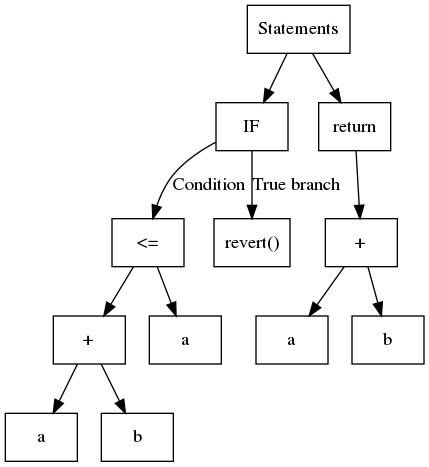

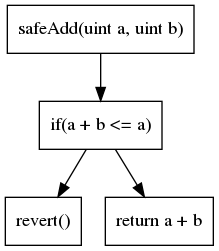

- Define security properties in Solidity for use with Echidna and Manticore. Focus on your state machine, access controls, arithmetic operations, external interactions, and standards conformance.

- Define security properties with Slither's Python API. Concentrate on inheritance, variable dependencies, access controls, and other structural issues.

- Be mindful of issues that automated tools cannot easily find:

- Lack of privacy: Transactions are visible to everyone else while queued in the pool.

- Front running transactions.

- Cryptographic operations.

- Risky interactions with external DeFi components.

Ask for help

Office Hours are held every Tuesday afternoon. These one-hour, one-on-one sessions provide an opportunity to ask questions about security, troubleshoot tool usage, and receive expert feedback on your current approach. We will help you work through this guide.

Join our Slack: Empire Hacking. We are always available in the #crytic and #ethereum channels if you have questions.

Security is about more than just smart contracts

Review our quick tips for general application and corporate security. While it is crucial to ensure on-chain code security, off-chain security lapses can be equally severe, especially regarding owner keys.

How to prepare for a security review

Get ready for your security review! Ensuring a few key elements are in place before the review starts can make the process significantly smoother for both sides.

Set a goal for the review

This is the most important step of a security review, and paradoxically the one most often overlooked. You should have an idea of what kind of questions you want answered, such as:

- What’s the overall level of security for this product?

- What are the areas that you are the most concerns about?

- Take into considerations previous audits and issues, complex parts, and fragile components.

- What is the worst case scenario for your project?

Knowing your biggest area of concern will help the assessment team tailor their approach to meet your needs.

Resolve the easy issues

Handing the code off to the assessment team is a lot like releasing the product: the cleaner the code, the better everything will go. To that end:

- Triage all results from static analysis tools. Go after the low-hanging fruits and use:

- Slither for Solidity codebases

- dylint for Rust codebases

- golangci for Go codebases

- CodeQL and Semgrep for Go/Rust/C++/... codebases

- Increase unit and feature test coverage. Ideally this has been part of the development process, but everyone slips up, tests don’t get updated, or new features don’t quite match the old integrations tests. Now is the time to update the tests and run them all.

- Remove dead code, unused libraries, and other extraneous weight. You may know which is unused but the consultants won’t and will waste time investigating it for potential issues. The same goes for that new feature that hasn’t seen progress in months, or that third-party library that doesn’t get used anymore.

Ensure the code is accessible

Making the code accessible and clearly identified will avoid wasting ressources from the security engineers.

- Provide a detailed list of files for review.. This will avoid confusion if your codebase is large and some elements are not meant to be in scope.

- Create a clear set of build instructions, and confirm the setup by cloning and testing your repository on a fresh environment. A code that cannot be built is a code more difficult to review.

- Freeze a stable commit hash, branch, or release prior to review. Working on a moving target makes the review more difficult

- Identify boilerplates, dependencies and difference from forked code. By highliting what code you wrote, you will help keeping the review focused

Document, Document, Document

Streamline the revuew process of building a mental model of your codebase by providing comprehensive documentation.

- Create flowcharts and sequence diagrams to depict primary workflows. They will help identify the components and their relationships

- Write users stories. Having users stories is a powerful tool to explain a project

- Outline the on-chain / off-chain assumptions. This includes:

- Data validation procedure

- Oracles information

- Bridges assumptions

- List actors and with their respective roles and privileges. The complexity of a system grows with its number of actors.

- Incorporate external developer documentation that links directly to your code. This will help to ensure the documentation is up to date with the code

- Add function documentation and inline comments for complex areas of your system. Code documentation should include:

- System and function level invariants

- Parameter ranges (minimum and maximum values) used in your system.

- Arithmetic formula: how they map to their specification, and their precision loss exceptations

- Compile a glossary for consistent terminology use. You use your codebase every day and you are familar with the terminology - a new person looking at your code is not

- Consider creating short video walkthroughs for complex workflows or areas of concern. Video walkthroughs is a great format to share your knowledge

Learn EVM

List of EVM Technical Knowledge

- EVM Opcode Reference: Reference and notes for each of the EVM opcodes

- Transaction Tracing: Helper scripts and guidance for generating and navigating transaction traces

- Arithmetic Checks: Guide to performing arithmetic checks in the EVM

- Yellow Paper Guidance: Symbol reference for more easily reading the Ethereum yellow paper

- Forks <> EIPs: Summarizes the EIPs included in each fork

- Forks <> CIPs: Summarizes the CIPs and EIPs included in each Celo fork (EVM-compatible chain)

- Upgrades <> TIPs: Summarizes the TIPs included in each TRON upgrade (EVM-compatible chain)

- Forks <> BEPs: Summarizes the BEPs included in each BSC fork (EVM-compatible chain)

Ethereum VM (EVM) Opcodes and Instruction Reference

This reference consolidates EVM opcode information from the yellow paper, stack exchange, solidity source, parity source, evm-opcode-gas-costs and Manticore.

Notes

The size of a "word" in EVM is 256 bits.

The gas information is a work in progress. If an asterisk is in the Gas column, the base cost is shown but may vary based on the opcode arguments.

Table

| Opcode | Name | Description | Extra Info | Gas |

|---|---|---|---|---|

0x00 | STOP | Halts execution | - | 0 |

0x01 | ADD | Addition operation | - | 3 |

0x02 | MUL | Multiplication operation | - | 5 |

0x03 | SUB | Subtraction operation | - | 3 |

0x04 | DIV | Integer division operation | - | 5 |

0x05 | SDIV | Signed integer division operation (truncated) | - | 5 |

0x06 | MOD | Modulo remainder operation | - | 5 |

0x07 | SMOD | Signed modulo remainder operation | - | 5 |

0x08 | ADDMOD | Modulo addition operation | - | 8 |

0x09 | MULMOD | Modulo multiplication operation | - | 8 |

0x0a | EXP | Exponential operation | - | 10* |

0x0b | SIGNEXTEND | Extend length of two's complement signed integer | - | 5 |

0x0c - 0x0f | Unused | Unused | - | |

0x10 | LT | Less-than comparison | - | 3 |

0x11 | GT | Greater-than comparison | - | 3 |

0x12 | SLT | Signed less-than comparison | - | 3 |

0x13 | SGT | Signed greater-than comparison | - | 3 |

0x14 | EQ | Equality comparison | - | 3 |

0x15 | ISZERO | Simple not operator | - | 3 |

0x16 | AND | Bitwise AND operation | - | 3 |

0x17 | OR | Bitwise OR operation | - | 3 |

0x18 | XOR | Bitwise XOR operation | - | 3 |

0x19 | NOT | Bitwise NOT operation | - | 3 |

0x1a | BYTE | Retrieve single byte from word | - | 3 |

0x1b | SHL | Shift Left | EIP145 | 3 |

0x1c | SHR | Logical Shift Right | EIP145 | 3 |

0x1d | SAR | Arithmetic Shift Right | EIP145 | 3 |

0x20 | KECCAK256 | Compute Keccak-256 hash | - | 30* |

0x21 - 0x2f | Unused | Unused | ||

0x30 | ADDRESS | Get address of currently executing account | - | 2 |

0x31 | BALANCE | Get balance of the given account | - | 700 |

0x32 | ORIGIN | Get execution origination address | - | 2 |

0x33 | CALLER | Get caller address | - | 2 |

0x34 | CALLVALUE | Get deposited value by the instruction/transaction responsible for this execution | - | 2 |

0x35 | CALLDATALOAD | Get input data of current environment | - | 3 |

0x36 | CALLDATASIZE | Get size of input data in current environment | - | 2* |

0x37 | CALLDATACOPY | Copy input data in current environment to memory | - | 3 |

0x38 | CODESIZE | Get size of code running in current environment | - | 2 |

0x39 | CODECOPY | Copy code running in current environment to memory | - | 3* |

0x3a | GASPRICE | Get price of gas in current environment | - | 2 |

0x3b | EXTCODESIZE | Get size of an account's code | - | 700 |

0x3c | EXTCODECOPY | Copy an account's code to memory | - | 700* |

0x3d | RETURNDATASIZE | Pushes the size of the return data buffer onto the stack | EIP 211 | 2 |

0x3e | RETURNDATACOPY | Copies data from the return data buffer to memory | EIP 211 | 3 |

0x3f | EXTCODEHASH | Returns the keccak256 hash of a contract's code | EIP 1052 | 700 |

0x40 | BLOCKHASH | Get the hash of one of the 256 most recent complete blocks | - | 20 |

0x41 | COINBASE | Get the block's beneficiary address | - | 2 |

0x42 | TIMESTAMP | Get the block's timestamp | - | 2 |

0x43 | NUMBER | Get the block's number | - | 2 |

0x44 | DIFFICULTY | Get the block's difficulty | - | 2 |

0x45 | GASLIMIT | Get the block's gas limit | - | 2 |

0x46 | CHAINID | Returns the current chain’s EIP-155 unique identifier | EIP 1344 | 2 |

0x47 | SELFBALANCE | Returns the balance of the currently executing account | - | 5 |

0x48 | BASEFEE | Returns the value of the base fee of the current block it is executing in. | EIP 3198 | 2 |

0x49 | BLOBHASH | Returns the transaction blob versioned hash at the given index, or 0 if the index is greater than the number of versioned hashes | EIP-4844 | 3 |

0x4a | BLOBBASEFEE | Returns the value of the blob base fee of the current block it is executing in | EIP-7516 | 2 |

0x4b - 0x4f | Unused | - | ||

0x50 | POP | Remove word from stack | - | 2 |

0x51 | MLOAD | Load word from memory | - | 3* |

0x52 | MSTORE | Save word to memory | - | 3* |

0x53 | MSTORE8 | Save byte to memory | - | 3 |

0x54 | SLOAD | Load word from storage | - | 800 |

0x55 | SSTORE | Save word to storage | - | 20000** |

0x56 | JUMP | Alter the program counter | - | 8 |

0x57 | JUMPI | Conditionally alter the program counter | - | 10 |

0x58 | PC | Get the value of the program counter prior to the increment | - | 2 |

0x59 | MSIZE | Get the size of active memory in bytes | - | 2 |

0x5a | GAS | Get the amount of available gas, including the corresponding reduction for the cost of this instruction | - | 2 |

0x5b | JUMPDEST | Mark a valid destination for jumps | - | 1 |

0x5c | TLOAD | Load word from transient storage | EIP-1153 | 100 |

0x5d | TSTORE | Save word to transient storage | EIP-1153 | 100 |

0x5e | MCOPY | Copy memory from one area to another | EIP-5656 | 3+3*words* |

0x5f | PUSH0 | Place the constant value 0 on stack | EIP-3855 | 2 |

0x60 | PUSH1 | Place 1 byte item on stack | - | 3 |

0x61 | PUSH2 | Place 2-byte item on stack | - | 3 |

0x62 | PUSH3 | Place 3-byte item on stack | - | 3 |

0x63 | PUSH4 | Place 4-byte item on stack | - | 3 |

0x64 | PUSH5 | Place 5-byte item on stack | - | 3 |

0x65 | PUSH6 | Place 6-byte item on stack | - | 3 |

0x66 | PUSH7 | Place 7-byte item on stack | - | 3 |

0x67 | PUSH8 | Place 8-byte item on stack | - | 3 |

0x68 | PUSH9 | Place 9-byte item on stack | - | 3 |

0x69 | PUSH10 | Place 10-byte item on stack | - | 3 |

0x6a | PUSH11 | Place 11-byte item on stack | - | 3 |

0x6b | PUSH12 | Place 12-byte item on stack | - | 3 |

0x6c | PUSH13 | Place 13-byte item on stack | - | 3 |

0x6d | PUSH14 | Place 14-byte item on stack | - | 3 |

0x6e | PUSH15 | Place 15-byte item on stack | - | 3 |

0x6f | PUSH16 | Place 16-byte item on stack | - | 3 |

0x70 | PUSH17 | Place 17-byte item on stack | - | 3 |

0x71 | PUSH18 | Place 18-byte item on stack | - | 3 |

0x72 | PUSH19 | Place 19-byte item on stack | - | 3 |

0x73 | PUSH20 | Place 20-byte item on stack | - | 3 |

0x74 | PUSH21 | Place 21-byte item on stack | - | 3 |

0x75 | PUSH22 | Place 22-byte item on stack | - | 3 |

0x76 | PUSH23 | Place 23-byte item on stack | - | 3 |

0x77 | PUSH24 | Place 24-byte item on stack | - | 3 |

0x78 | PUSH25 | Place 25-byte item on stack | - | 3 |

0x79 | PUSH26 | Place 26-byte item on stack | - | 3 |

0x7a | PUSH27 | Place 27-byte item on stack | - | 3 |

0x7b | PUSH28 | Place 28-byte item on stack | - | 3 |

0x7c | PUSH29 | Place 29-byte item on stack | - | 3 |

0x7d | PUSH30 | Place 30-byte item on stack | - | 3 |

0x7e | PUSH31 | Place 31-byte item on stack | - | 3 |

0x7f | PUSH32 | Place 32-byte (full word) item on stack | - | 3 |

0x80 | DUP1 | Duplicate 1st stack item | - | 3 |

0x81 | DUP2 | Duplicate 2nd stack item | - | 3 |

0x82 | DUP3 | Duplicate 3rd stack item | - | 3 |

0x83 | DUP4 | Duplicate 4th stack item | - | 3 |

0x84 | DUP5 | Duplicate 5th stack item | - | 3 |

0x85 | DUP6 | Duplicate 6th stack item | - | 3 |

0x86 | DUP7 | Duplicate 7th stack item | - | 3 |

0x87 | DUP8 | Duplicate 8th stack item | - | 3 |

0x88 | DUP9 | Duplicate 9th stack item | - | 3 |

0x89 | DUP10 | Duplicate 10th stack item | - | 3 |

0x8a | DUP11 | Duplicate 11th stack item | - | 3 |

0x8b | DUP12 | Duplicate 12th stack item | - | 3 |

0x8c | DUP13 | Duplicate 13th stack item | - | 3 |

0x8d | DUP14 | Duplicate 14th stack item | - | 3 |

0x8e | DUP15 | Duplicate 15th stack item | - | 3 |

0x8f | DUP16 | Duplicate 16th stack item | - | 3 |

0x90 | SWAP1 | Exchange 1st and 2nd stack items | - | 3 |

0x91 | SWAP2 | Exchange 1st and 3rd stack items | - | 3 |

0x92 | SWAP3 | Exchange 1st and 4th stack items | - | 3 |

0x93 | SWAP4 | Exchange 1st and 5th stack items | - | 3 |

0x94 | SWAP5 | Exchange 1st and 6th stack items | - | 3 |

0x95 | SWAP6 | Exchange 1st and 7th stack items | - | 3 |

0x96 | SWAP7 | Exchange 1st and 8th stack items | - | 3 |

0x97 | SWAP8 | Exchange 1st and 9th stack items | - | 3 |

0x98 | SWAP9 | Exchange 1st and 10th stack items | - | 3 |

0x99 | SWAP10 | Exchange 1st and 11th stack items | - | 3 |

0x9a | SWAP11 | Exchange 1st and 12th stack items | - | 3 |

0x9b | SWAP12 | Exchange 1st and 13th stack items | - | 3 |

0x9c | SWAP13 | Exchange 1st and 14th stack items | - | 3 |

0x9d | SWAP14 | Exchange 1st and 15th stack items | - | 3 |

0x9e | SWAP15 | Exchange 1st and 16th stack items | - | 3 |

0x9f | SWAP16 | Exchange 1st and 17th stack items | - | 3 |

0xa0 | LOG0 | Append log record with no topics | - | 375 |

0xa1 | LOG1 | Append log record with one topic | - | 750 |

0xa2 | LOG2 | Append log record with two topics | - | 1125 |

0xa3 | LOG3 | Append log record with three topics | - | 1500 |

0xa4 | LOG4 | Append log record with four topics | - | 1875 |

0xa5 - 0xaf | Unused | - | ||

0xb0 | JUMPTO | Tentative libevmasm has different numbers | EIP 615 | |

0xb1 | JUMPIF | Tentative | EIP 615 | |

0xb2 | JUMPSUB | Tentative | EIP 615 | |

0xb4 | JUMPSUBV | Tentative | EIP 615 | |

0xb5 | BEGINSUB | Tentative | EIP 615 | |

0xb6 | BEGINDATA | Tentative | EIP 615 | |

0xb8 | RETURNSUB | Tentative | EIP 615 | |

0xb9 | PUTLOCAL | Tentative | EIP 615 | |

0xba | GETLOCAL | Tentative | EIP 615 | |

0xbb - 0xe0 | Unused | - | ||

0xe1 | SLOADBYTES | Only referenced in pyethereum | - | - |

0xe2 | SSTOREBYTES | Only referenced in pyethereum | - | - |

0xe3 | SSIZE | Only referenced in pyethereum | - | - |

0xe4 - 0xef | Unused | - | ||

0xf0 | CREATE | Create a new account with associated code | - | 32000 |

0xf1 | CALL | Message-call into an account | - | Complicated |

0xf2 | CALLCODE | Message-call into this account with alternative account's code | - | Complicated |

0xf3 | RETURN | Halt execution returning output data | - | 0 |

0xf4 | DELEGATECALL | Message-call into this account with an alternative account's code, but persisting into this account with an alternative account's code | - | Complicated |

0xf5 | CREATE2 | Create a new account and set creation address to sha3(sender + sha3(init code)) % 2**160 | - | |

0xf6 - 0xf9 | Unused | - | - | |

0xfa | STATICCALL | Similar to CALL, but does not modify state | - | 40 |

0xfb | Unused | - | - | |

0xfd | REVERT | Stop execution and revert state changes, without consuming all provided gas and providing a reason | - | 0 |

0xfe | INVALID | Designated invalid instruction | - | 0 |

0xff | SELFDESTRUCT | Sends all ETH to the target. If executed in the same transaction a contract was created, register the account for later deletion | EIP-6780 | 5000* |

Instruction Details

STOP

0x00

() => ()

halts execution

ADD

0x01

Takes two words from stack, adds them, then pushes the result onto the stack.

(a, b) => (c)

c = a + b

MUL

0x02

(a, b) => (c)

c = a * b

SUB

0x03

(a, b) => (c)

c = a - b

DIV

0x04

(a, b) => (c)

c = a / b

SDIV

0x05

(a: int256, b: int256) => (c: int256)

c = a / b

MOD

0x06

(a, b) => (c)

c = a % b

SMOD

0x07

(a: int256, b: int256) => (c: int256)

c = a % b

ADDMOD

0x08

(a, b, m) => (c)

c = (a + b) % m

MULMOD

0x09

(a, b, m) => (c)

c = (a * b) % m

EXP

0x0a

(a, b, m) => (c)

c = (a * b) % m

SIGNEXTEND

0x0b

(b, x) => (y)

y = SIGNEXTEND(x, b)

sign extends x from (b + 1) * 8 bits to 256 bits.

LT

0x10

(a, b) => (c)

c = a < b

all values interpreted as uint256

GT

0x11

(a, b) => (c)

c = a > b

all values interpreted as uint256

SLT

0x12

(a, b) => (c)

c = a < b

all values interpreted as int256

SGT

0x13

(a, b) => (c)

c = a > b

all values interpreted as int256

EQ

0x14

Pops 2 elements off the stack and pushes the value 1 to the stack in case they're equal, otherwise the value 0.

(a, b) => (c)

c = a == b

ISZERO

0x15

(a) => (c)

c = a == 0

AND

0x16

(a, b) => (c)

c = a & b

OR

0x17

(a, b) => (c)

c = a | b

XOR

0x18

(a, b) => (c)

c = a ^ b

NOT

0x19

(a) => (c)

c = ~a

BYTE

0x1a

(i, x) => (y)

y = (x >> (248 - i * 8) & 0xff

SHL

0x1b

Pops 2 elements from the stack and pushes the second element onto the stack shifted left by the shift amount (first element).

(shift, value) => (res)

res = value << shift

SHR

0x1c

Pops 2 elements from the stack and pushes the second element onto the stack shifted right by the shift amount (first element).

(shift, value) => (res)

res = value >> shift

SAR

0x1d

(shift, value) => (res)

res = value >> shift

value: int256

KECCAK256

0x20

(offset, len) => (hash)

hash = keccak256(memory[offset:offset+len])

ADDRESS

0x30

() => (address(this))

BALANCE

0x31

() => (address(this).balance)

ORIGIN

0x32

() => (tx.origin)

CALLER

0x33

() => (msg.sender)

CALLVALUE

0x34

() => (msg.value)

CALLDATALOAD

0x35

(index) => (msg.data[index:index+32])

CALLDATASIZE

0x36

() => (msg.data.size)

CALLDATACOPY

0x37

(memOffset, offset, length) => ()

memory[memOffset:memOffset+len] = msg.data[offset:offset+len]

CODESIZE

0x38

() => (address(this).code.size)

CODECOPY

0x39

(memOffset, codeOffset, len) => ()

memory[memOffset:memOffset+len] = address(this).code[codeOffset:codeOffset+len]

GASPRICE

0x3a

() => (tx.gasprice)

EXTCODESIZE

0x3b

(addr) => (address(addr).code.size)

EXTCODECOPY

0x3c

(addr, memOffset, offset, length) => ()

memory[memOffset:memOffset+len] = address(addr).code[codeOffset:codeOffset+len]

RETURNDATASIZE

0x3d

() => (size)

size = RETURNDATASIZE()

The number of bytes that were returned from the last ext call

RETURNDATACOPY

0x3e

(memOffset, offset, length) => ()

memory[memOffset:memOffset+len] = RETURNDATA[codeOffset:codeOffset+len]

RETURNDATA is the data returned from the last external call

EXTCODEHASH

0x3f

(addr) => (hash)

hash = address(addr).exists ? keccak256(address(addr).code) : 0

BLOCKHASH

0x40

(number) => (hash)

hash = block.blockHash(number)

COINBASE

0x41

() => (block.coinbase)

TIMESTAMP

0x42

() => (block.timestamp)

NUMBER

0x43

() => (block.number)

DIFFICULTY

0x44

() => (block.difficulty)

GASLIMIT

0x45

() => (block.gaslimit)

CHAINID

0x46

() => (chainid)

where chainid = 1 for mainnet & some other value for other networks

SELFBALANCE

0x47

() => (address(this).balance)

BASEFEE

0x48

() => (block.basefee)

current block's base fee (related to EIP1559)

BLOBHASH

0x49

(index) => (tx.blob_versioned_hashes[index])

the transaction blob versioned hash at the given index, or 0 if the index is greater than the number of versioned hashes (EIP-4844)

BLOBBASEFEE

0x4a

() => (block.blobbasefee)

current block's blob base fee (EIP-7516)

POP

0x50

(a) => ()

discards the top stack item

MLOAD

0x51

(offset) => (value)

value = memory[offset:offset+32]

MSTORE

0x52

Saves a word to the EVM memory. Pops 2 elements from stack - the first element being the word memory address where the saved value (second element popped from stack) will be stored.

(offset, value) => ()

memory[offset:offset+32] = value

MSTORE8

0x53

(offset, value) => ()

memory[offset:offset+32] = value & 0xff

SLOAD

0x54

Pops 1 element off the stack, that being the key which is the storage slot and returns the read value stored there.

(key) => (value)

value = storage[key]

SSTORE

0x55

Pops 2 elements off the stack, the first element being the key and the second being the value which is then stored at the storage slot represented from the first element (key).

(key, value) => ()

storage[key] = value

JUMP

0x56

(dest) => ()

pc = dest

JUMPI

0x57

Conditional - Pops 2 elements from the stack, the first element being the jump location and the second being the value 0 (false) or 1 (true). If the value’s 1 the PC will be altered and the jump executed. Otherwise, the value will be 0 and the PC will remain the same and execution unaltered.

(dest, cond) => ()

pc = cond ? dest : pc + 1

PC

0x58

() => (pc)

MSIZE

0x59

() => (memory.size)

GAS

0x5a

() => (gasRemaining)

not including the gas required for this opcode

JUMPDEST

0x5b

() => ()

noop, marks a valid jump destination

TLOAD

0x5c

Pops 1 element off the stack, that being the key which is the transient storage slot and returns the read value stored there (EIP-1153).

(key) => (value)

value = transient_storage[key]

TSTORE

0x5d